To Stay or Not to Stay in R&D Mode…

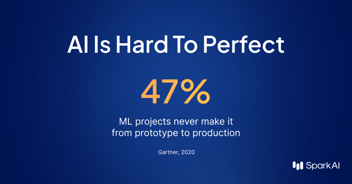

Recently, we asked an important question in a short blog post: Why do AI projects fail? In it, we laid out how AI edge cases stifle AI progress and detailed a variety of approaches to the edge case problem.

Today’s post highlights one of the more common approaches to edge cases: Staying in R&D (training) mode. We discuss the approach’s potential benefits, when it might appeal to you, and its drawbacks.

What staying in R&D mode is:

Staying in R&D mode means staying in the lab. Instead of releasing your AI system for real-world use, you continue training the model with more annotated data until (hopefully) the frequency and severity of edge cases decrease. (Whether you can ever completely eliminate them is a different question.)

Potential benefits:

Staying in the lab lets you focus on fine-tuning your model and resolving commonly known or easy-to-foresee edge cases. This is particularly important for safety-critical applications (like autonomous vehicles) operating in highly unstructured environments, where human life is at risk and the technology still isn’t quite there.

This may appeal to you if…

- You have an unlimited budget and no business pressure to launch your product

- You’re comfortable with no real-world results for long periods of time

- Revenue from the AI system isn’t crucial to your business (now or in the near term)

- Waiting won’t sacrifice your industry position, market share, or expectations you have already set with your consumers

- Your system will perform infinitely better with additional training

Drawbacks of staying in R&D (training) mode:

- Where are the results? Staying in R&D mode means no revenue generation or ROI. Any AI business leader knows the downstream effects: Demoralizing the internal team. Losing investor confidence. Running out of operating budget. Chilling future internal investment. Ultimately, going out of business without a proven product in the market.

- The lab alone will never solve the edge case problem. In a controlled environment, your model will never get the experience it needs to confidently tackle the diversity of real-world edge cases. Sheltering your AI product in the lab means you’re not seeing how it functions in customer environments. You’re not studying how and when it fails, and you’re not collecting the critical production data that you need to continue training your AI over time.

- The world doesn’t benefit from your product. Customers won’t get to experience your innovation, and that’s not what you promised them. People create AI products because they want to change the world, not sit on the sidelines.

- You hand your competitors the edge. While you wait, your competitors will release similar technology first, hurting your chances of leading the market. This threatens your investment and all but guarantees hefty additional R&D costs to catch up.

Success stories in data labeling have already proven that humans are a critical part of the AI equation at massive scale. While these services have helped build vast training libraries, the next million data points are not what will turn your ROI plateau into a hockey stick. The bottom-up approach of hard, honest ML development powered by training data needs to be paired with a top-down approach that enables teams to deliver not just the features they’re currently working on, but also the ones they hope to work on five years from now.
