The Power of Human Cognition in the AI Workflow. And How to Wield It.

The most innovative automation companies today are thoughtfully incorporating real-time human cognition in their AI workflows as a building block toward near-term commercialization. Two very divergent philosophies have emerged for how exactly this should be done. Only one of them is part of the future.

The elevation of deep learning to widespread commercial use over the past decade, and the step-change in performance that came with it, bred a (justifiable) overconfidence in how much could be achieved with AI and how soon. Remember: self-driving cars everywhere by 2020! A common belief was that with just a little more time, just a little more money, and just a little more data, the model would “get there.” We all soon realized this was not true.

There is a fundamental cognitive gap in how AI models are built and trained, and we are at least two groundbreaking, once-in-a-decade innovations away from closing it. Until that gap is filled, AI needs human cognition to help it overcome the long tail of confusing situations it encounters in the open world (called edge cases) and perform well enough to be commercialized today.

But not everyone agrees on how AI decision-making and real-time human cognition should be intertwined, especially as it relates to robots. The two schools of thought look something like this:

- Approach 1: AI stays in control always. In moments of confusion, a human is called to provide lightweight contextual cues about the scene to help AI arrive at a confident decision on its own.

- Approach 2: AI relinquishes control. In moments of confusion, a human takes over and remotely pilots the robot or makes decisions in place of the AI system.

Across the industry, we’ve seen some companies champion the approach of starting with humans remotely piloting a vehicle (akin to Approach 2) and then “layering in the autonomy.”

It just doesn’t work that way. Having humans remotely pilot a robot from thousands of miles away, and thinking of that as a step toward autonomy, is wrong. It’s not safe, it’s not scalable, and it almost never leads to the desired outcome of incremental autonomy. Here’s why.

Safety

Robots come in all forms – from small plastic coolers on wheels to multi-ton autonomous tractors. All of these robots are continuously ingesting information about their environments and making rapid decisions about what to do next. Avoid this obstacle, drive through that one, stop completely. Making the best, safest decision requires near-perfect situational awareness; context is everything. Conveying that awareness to a remote location and relinquishing decision-making control to a human a thousand miles away is a risk that cannot be overlooked. The smallest glitch in connectivity or the slightest gap in awareness can be critical.

Further, by offloading safety-critical functions to a human backstop, the functional safety case for the standalone autonomous system is never adequately validated, and the engineering to support it never quite develops. This keeps those functions from ever being handed back to autonomy.

All of this is not safe.

Scalability

In order to deliver situational awareness to a human operator thousands of miles away, companies that have chosen this path now need to invest (heavily) in enabling it. This forces system architecture decisions that are optimized for the human remote operators, not the robot. Cameras and other sensors are disproportionately selected for their usefulness to humans. Those sensors are then physically mounted where they will help a human see better, not necessarily where they need to be for autonomy. Limited budgets only exacerbate this tradeoff.

To remotely pilot robots, human operators need specialized equipment – game-style joysticks, steering wheels, wrap-around monitors, etc. Expensive equipment like this needs to live somewhere, necessitating further investment in physical remote operations centers. Human operators need to familiarize themselves with this equipment, necessitating lengthy and specialized training, and constraining who can and cannot become an operator.

Further still, each “intervention” requires a human to be engaged with a robot for minutes at a time. Ensuring no robot is ever left unsupported necessitates an oversized human workforce and a human-to-robot ratio that looks a lot more like 1:1 than the 1:many you would have hoped for.

All of this costs money and is not scalable.

Alignment with your long-term mission

But the most significant pitfall of having humans remotely pilot your robots is that it doesn’t align with your long-term mission of building truly autonomous systems.

Leveraging humans in this way eliminates the discipline and urgency to architect an AI system that learns to solve problems on its own. Compare this to a child asking a parent for homework help. If the parent consistently supplies the answer, the child will never learn. Worse, the child will never build the skills to tackle other problems they encounter in the future. Similarly, instead of developing toward an AI system that can solve problems on its own, you end up with a system that gets really good at giving up and waiting to be rescued.

Compounding this problem is the understandable pressure to show progress. Autonomy is hard. If remote piloting is part of your architecture, it’s easy to fall into the trap of overfitting your AI development to it. As you encounter challenges in development, it’s tempting to shift more responsibility away from AI and onto your human operators, especially if it means reaching your next customer demo or funding milestone. Inevitably, this growing dependency diverts attention and resources. The tradeoff between investing in incrementally improving remote piloting and investing in the longer-term endeavor of autonomy becomes harder to make, and so begins a vicious downward spiral. Resource allocation reflects company priorities, and when autonomy stops being something you meaningfully invest in, it takes a back seat. For an autonomy company, that’s a dangerous trajectory to be on. Instead of a temporary stepping stone toward autonomy, you end up with a permanent fixture that you suddenly can’t do without.

That may work for a time, but if the scaled-up cost of operation doesn’t end up surprising you, then the competitor that did invest in autonomy will.

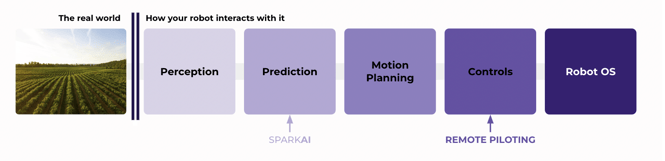

The on-robot stack. The deeper you inject human cognition (i.e., the farther away from where decisions are made and executed), the farther away you get from your path to autonomy.

Either you’re on the path to full autonomy, or you’re on the path to always having a human behind the wheel. You can’t have both. Neither is necessarily bad; you just need to be honest about what you’re looking to achieve through technology. Forklifts in warehouse settings are a great example of where human insight is required throughout the operation of the vehicle and where the work cannot (at least for now) be fully automated. Remote piloting could make sense here. In this setting, the role of technology is simply to take the human forklift operator out of the cab and move them to an office, which unlocks various cost and safety benefits. But it’s important to be clear – this is not autonomy.

The journey of autonomy comes down to figuring out how we humans can become better trainers; how we can teach our AI offspring everything we’ve learned from decades of life experience and millennia of human evolution. The answer will take time to reveal itself. Until then, we need a better way to bring robots and AI to life.

There is a better way

Today and for the foreseeable future, AI needs humans.

The right approach for engaging human cognition in the AI workflow is to regard it as nothing more than an input – a contribution that is presented to the AI system for consideration. Unlike remote piloting, which interfaces at the control level, this approach interfaces at the decision level. The human is not taking control, but is rather influencing a decision that the AI system always remains responsible for making. Here’s an example:

A delivery robot encounters an obstacle that it doesn’t recognize and, as a result, doesn’t know how to safely navigate. The robot sends an image of the obstacle to a human. The human easily recognizes that the unknown obstacle is actually an overturned trash can, and shares this input with the robot. The robot already knows how to navigate immovable obstacles. Applying the input it received from the human, the robot recognizes the trash can as an immovable object and safely navigates around it autonomously.
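The trash can example can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any real robot stack: the label table, the category names, the behavior names, and the `decide` function are all hypothetical. What it aims to show is the shape of the interaction: the human supplies a label, and the robot maps that label onto a category it already knows how to handle and then makes the decision itself.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping from human-supplied labels to categories the robot
# already knows how to handle. Labels and categories are illustrative only.
LABEL_TO_CATEGORY = {
    "overturned trash can": "immovable_obstacle",
    "parked bicycle": "immovable_obstacle",
    "plastic bag": "traversable_debris",
}

# Behaviors the robot is assumed to be able to execute on its own.
CATEGORY_TO_BEHAVIOR = {
    "immovable_obstacle": "navigate_around",
    "traversable_debris": "drive_through",
}


@dataclass
class Observation:
    detected_category: Optional[str]  # None when the robot cannot classify
    object_is_moving: bool            # taken from the robot's own sensors


def decide(obs: Observation, human_label: Optional[str] = None) -> str:
    """Return the behavior the robot chooses; the human only supplies a cue."""
    category = obs.detected_category
    if category is None and human_label is not None:
        # Associate the unknown scene with a known category, if possible.
        category = LABEL_TO_CATEGORY.get(human_label.strip().lower())

    # The robot keeps decision authority: if its own sensors contradict the
    # cue (an "immovable" object that is moving), it falls back to stopping.
    if category == "immovable_obstacle" and obs.object_is_moving:
        return "stop"

    # Anything the robot still cannot place in a known category means stop.
    return CATEGORY_TO_BEHAVIOR.get(category, "stop")


unknown = Observation(detected_category=None, object_is_moving=False)
print(decide(unknown))                          # no cue available
print(decide(unknown, "Overturned trash can"))  # cue maps to a known category
```

Note that the human never issues a motion command in this sketch. Without a cue the robot stops, and with one it selects a behavior it already had.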

AI systems that are designed well for autonomy are intelligent enough. In moments of confusion, they don’t need someone to take control. What they need are the missing contextual cues that enable them to associate a new, unknown scenario with one they know how to handle on their own. Taking this approach offers multiple benefits:

It’s safe – The AI system remains in control. The robot considers input from humans alongside input from other sensors and its existing knowledge of the world. Decision authority remains with the robot, which can go as far as overriding the human input if that input conflicts with what the robot believes to be the safest action in a changing environment. This is especially important for safety-critical functions.

It’s scalable – The user interface through which humans provide input can be simple, and accessible from standard home computers. No specialized equipment required. This also means training is more intuitive, widening the pool of eligible human participants. Further, human input through this method can be delivered in discrete units, in seconds, which means a relatively small workforce can support a large fleet of robots, maximizing cost efficiency.

It’s aligned with your long-term mission – Keeping your AI in control maintains the development emphasis squarely on improving its ability to make good decisions with whatever input is available. The resulting system architecture is agnostic to human input being available, and is therefore not dependent on it. Better still, through each interaction, knowledge and AI-ingestible ground-truth data accumulate that can be used to improve the underlying AI model and to further decrease the reliance on human inputs.
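To make the scalability point concrete, here is a rough back-of-the-envelope sketch of how engagement time drives staffing. Every number in it is a hypothetical input chosen for illustration, not a measurement, and the model is a simple utilization estimate rather than a proper queueing analysis. The function name and parameters are likewise assumptions.

```python
import math


def operators_needed(fleet_size: int,
                     requests_per_robot_hour: float,
                     seconds_per_request: float,
                     target_utilization: float = 0.5) -> int:
    """Rough count of concurrent operators needed to cover a fleet.

    Simple utilization model (an assumption, not a queueing analysis):
    total busy seconds per hour divided by the seconds each operator
    can spend on requests per hour.
    """
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    busy_seconds = fleet_size * requests_per_robot_hour * seconds_per_request
    capacity_per_operator = 3600 * target_utilization
    return max(1, math.ceil(busy_seconds / capacity_per_operator))


# Hypothetical inputs: 100 robots, each asking for help 4 times per hour.
FLEET, RATE = 100, 4.0
piloting = operators_needed(FLEET, RATE, seconds_per_request=180)  # minutes-long takeover
cues = operators_needed(FLEET, RATE, seconds_per_request=10)       # seconds-long cue

print(f"remote piloting: {piloting} operators, about 1:{FLEET / piloting:.1f}")
print(f"contextual cues: {cues} operators, about 1:{FLEET / cues:.1f}")
```

With these assumed inputs the estimate comes out to 40 operators for minutes-long takeovers versus 3 for seconds-long cues. The absolute figures mean little; the point is that required headcount scales linearly with how long each interaction holds a human's attention.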

Your vision of the future

For your product to have the impact you believe in, at the scale you envision, it must be capable of eventually making decisions entirely on its own. Incredible strides are being made across industry and academia to enable AI, over time, to think more like us. For the time being, AI still needs human input. Being thoughtful about how best to deliver it, specific to what your application requires, is the key to realizing your application’s full potential today and your vision of the future tomorrow.

–––

SparkAI is helping the world’s most ambitious automation companies bring AI products to life, with an API that makes it easy and radically cost-effective to instantly leverage real-time human cognition in live AI workflows. Learn more at spark.ai